This sample approximates a metaball effect in screen space using a pixel shader. True metaball techniques deform surfaces with pushing or pulling modifiers and are commonly used to model liquids, such as water droplets merging together. Because evaluating metaballs in 3D can be computationally expensive, this sample approximates the 3D effect in 2D image space instead.

| Item | Location |
| --- | --- |
| Source | (SDK root)\Samples\C++\Direct3D\Blobs |
| Executable | (SDK root)\Samples\C++\Direct3D\Bin\x86 or x64\Blobs.exe |

Metaballs (also called Blinn blobs, after James Blinn, who formulated the technique) are included with most modeling software as an easy way to create smooth surfaces from a series of enclosed volumes, called blobs, that merge together. The apparent flow between blobs makes the technique particularly well suited to particle systems that model liquids.

The underlying technique is based on isosurfaces: surfaces, defined by a 3D equation, that bound a closed volume. For instance, $x^2 + y^2 + z^2 = 1$ defines a sphere of radius 1 centered at the origin. The idea extends to arbitrary 3D functions: an isosurface is the set of all points at which a continuous scalar function equals some chosen constant value. Individual metaballs are often implemented as spheres defined by Gaussian distributions, where the highest value lies at the sphere's center and all points whose value exceeds an arbitrary threshold are considered inside the sphere. Subtracting the threshold from the Gaussian height gives a surface height of zero at the blob's edge. Because the surface is defined entirely by this threshold, the values of overlapping spheres can be summed to produce a new, merged surface. Figure 1 shows the smooth curve that results from adding two Gaussian curves.

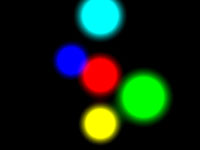

Figure 1: Addition of Gaussian curves produces smooth blending
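To make the summing concrete, the following C++ sketch evaluates a 2D field of Gaussian blobs and tests it against a threshold. It assumes a falloff of $e^{-d^2/r^2}$; the names `Blob`, `blobField`, `surfaceHeight`, and `inside` are hypothetical, and the sample itself reads its falloff from a precomputed 2D Gaussian texture rather than calling `exp`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical blob description for illustration: 2D center plus falloff radius.
struct Blob {
    double cx;
    double cy;
    double radius;  // controls how quickly the Gaussian falls off
};

// Gaussian field of one blob (assumed form): 1 at the center, approaching 0 far away.
double blobField(const Blob& b, double x, double y) {
    assert(b.radius > 0.0);
    const double dx = x - b.cx;
    const double dy = y - b.cy;
    return std::exp(-(dx * dx + dy * dy) / (b.radius * b.radius));
}

// Summed field minus the threshold: > 0 inside, 0 on the surface, < 0 outside.
double surfaceHeight(const std::vector<Blob>& blobs, double threshold, double x, double y) {
    double sum = 0.0;
    for (const Blob& b : blobs) {
        sum += blobField(b, x, y);  // overlapping fields simply add
    }
    return sum - threshold;
}

// True when (x, y) lies inside the merged isosurface.
bool inside(const std::vector<Blob>& blobs, double threshold, double x, double y) {
    return surfaceHeight(blobs, threshold, x, y) > 0.0;
}
```

With two blobs of radius 1 centered at (-1, 0) and (1, 0) and a threshold of 0.5, the midpoint (0, 0) receives about 0.37 from each blob. That is outside either blob on its own, but inside their sum (about 0.74), which is the merging behavior shown in Figure 1.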

For a true metaball effect, these calculations would be performed in 3D space. Instead, this sample performs them in 2D screen space, which produces a convincing visual effect at low computational cost. One limitation you may notice, however, is a loss of accuracy when blobs in the background overlap blobs near the camera: because they line up along the view vector, they merge on screen even though they are separated in depth.

Each blob is described by its world-space position, size, and color. Before each frame is rendered, FrameMove animates the blob positions, and then FillBlobVB projects each blob into screen space and fills the vertex buffer with the corresponding billboard quads. The vertex format of these billboards is shown below:

```hlsl
struct VS_OUTPUT
{
    float4 vPosition : POSITION;  // Screen-space position of the vertex
    float2 tCurr     : TEXCOORD0; // Texture coordinates for the Gaussian sample
    float2 tBack     : TEXCOORD1; // Texture coordinates of the vertex position on the render target
    float2 sInfo     : TEXCOORD2; // Z-offset and blob size
    float3 vColor    : TEXCOORD3; // Blob color
};
```
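FillBlobVB itself is not listed here. The C++ sketch below shows one plausible way to build a billboard whose fields match VS_OUTPUT. It projects a view-space blob center with a simple pinhole model (an assumption; the sample presumably uses its own view and projection matrices), then emits four corners whose tCurr spans the Gaussian texture and whose tBack is the corner's own position remapped into render-target texture space. `BlobVertex`, `ndcToTex`, `makeBillboard`, `focal`, and `aspect` are hypothetical names.

```cpp
#include <array>
#include <cassert>

// Hypothetical CPU-side mirror of VS_OUTPUT; the sample's real vertex layout may differ.
struct BlobVertex {
    float pos[4];    // normalized device coordinates (x, y, z, w)
    float tCurr[2];  // corner of the Gaussian texture, (0,0)..(1,1)
    float tBack[2];  // where this vertex lands on the render target, in texture space
    float sInfo[2];  // (z-offset, blob size)
    float color[3];
};

// Map NDC (-1..1, +y up) to Direct3D texture space (0..1, +v down).
void ndcToTex(float x, float y, float& u, float& v) {
    u = 0.5f * x + 0.5f;
    v = -0.5f * y + 0.5f;
}

// Project a view-space blob (z > 0 in front of the camera) and emit a
// screen-aligned quad as four vertices in triangle-strip order.
std::array<BlobVertex, 4> makeBillboard(const float viewPos[3], float size,
                                        const float color[3], float focal, float aspect) {
    assert(viewPos[2] > 0.0f && focal > 0.0f && aspect > 0.0f);
    const float invZ = 1.0f / viewPos[2];
    const float cx = viewPos[0] * focal * invZ / aspect;  // projected center
    const float cy = viewPos[1] * focal * invZ;
    const float hx = size * focal * invZ / aspect;        // projected half-extent
    const float hy = size * focal * invZ;

    // Corner signs: top-left, top-right, bottom-left, bottom-right.
    const float sx[4] = {-1.0f, 1.0f, -1.0f, 1.0f};
    const float sy[4] = {1.0f, 1.0f, -1.0f, -1.0f};

    std::array<BlobVertex, 4> quad{};
    for (int i = 0; i < 4; ++i) {
        BlobVertex& vtx = quad[i];
        vtx.pos[0] = cx + sx[i] * hx;
        vtx.pos[1] = cy + sy[i] * hy;
        vtx.pos[2] = 0.0f;  // depth is not used by this sketch
        vtx.pos[3] = 1.0f;
        vtx.tCurr[0] = 0.5f * sx[i] + 0.5f;   // 0 on the left edge, 1 on the right
        vtx.tCurr[1] = -0.5f * sy[i] + 0.5f;  // 0 on the top edge, 1 on the bottom
        ndcToTex(vtx.pos[0], vtx.pos[1], vtx.tBack[0], vtx.tBack[1]);
        vtx.sInfo[0] = 0.0f;  // z-offset: left at 0, its computation is not described here
        vtx.sInfo[1] = size;
        for (int c = 0; c < 3; ++c) vtx.color[c] = color[c];
    }
    return quad;
}
```

Because tBack is the vertex's own screen position remapped to [0, 1], interpolating it across the quad lets the pixel shader read back whatever is already stored at the same pixel (ignoring the half-texel offset that Direct3D 9 pixel-to-texel mapping requires).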

The coordinates stored in tCurr are used to sample a 2D Gaussian texture that defines the sphere. The coordinates stored in tBack are used to read the data accumulated from previously rendered blobs, and could also be used to blend against a background texture. These pre-transformed vertices are passed directly to the BlobBlenderPS pixel shader:

```hlsl
PS_OUTPUT BlobBlenderPS( VS_OUTPUT Input )
{
    PS_OUTPUT Output;
    float4 weight;

    // Get the new blob weight
    DoLerp( Input.tCurr, weight );

    // Get the old data
    float4 oldsurfdata = tex2D( SurfaceBufferSampler, Input.tBack );
    float4 oldmatdata  = tex2D( MatrixBufferSampler, Input.tBack );

    // Generate new surface data
    float4 newsurfdata = float4( (Input.tCurr.x - 0.5) * Input.sInfo.y,
                                 (Input.tCurr.y - 0.5) * Input.sInfo.y,
                                 0,
                                 1 );
    newsurfdata *= weight.r;

    // Generate new material properties
    float4 newmatdata = float4( Input.vColor.r, Input.vColor.g, Input.vColor.b, 0 );
    newmatdata *= weight.r;

    // Additive blending
    Output.vColor[0] = newsurfdata + oldsurfdata;
    Output.vColor[1] = newmatdata + oldmatdata;

    return Output;
}
```
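The per-pixel arithmetic of BlobBlenderPS can be mirrored on the CPU to see what the two render targets end up holding. In this C++ sketch, `PixelAccum` and `accumulate` are hypothetical names and `weight` stands in for the value DoLerp returns; each call adds one blob's contribution to a single pixel in the same way the shader's additive step does.

```cpp
#include <array>
#include <cassert>

using float4 = std::array<float, 4>;

// Running totals for one pixel, mirroring the two render targets.
struct PixelAccum {
    float4 surf{};  // (sum of w*dx, sum of w*dy, 0, sum of w)
    float4 mat{};   // (sum of w*r, sum of w*g, sum of w*b, 0)
};

// Add one blob's contribution: weight = Gaussian sample, (tu, tv) = tCurr,
// size = sInfo.y, color = vColor. Mirrors the body of BlobBlenderPS.
void accumulate(PixelAccum& acc, float weight, float tu, float tv,
                float size, const float color[3]) {
    assert(weight >= 0.0f);
    const float4 newSurf = {(tu - 0.5f) * size * weight,
                            (tv - 0.5f) * size * weight,
                            0.0f,
                            weight};
    const float4 newMat = {color[0] * weight, color[1] * weight, color[2] * weight, 0.0f};
    for (int i = 0; i < 4; ++i) {
        acc.surf[i] += newSurf[i];  // Output.vColor[0] = newsurfdata + oldsurfdata
        acc.mat[i] += newMat[i];    // Output.vColor[1] = newmatdata + oldmatdata
    }
}
```

After a red blob with weight 0.25 and a blue blob with weight 0.75 are accumulated at the same pixel, the w channel of the surface data holds the total weight 1.0, and dividing the color sum by it yields the weighted-average color (0.25, 0, 0.75). That division is the normalization the lighting pass performs.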

The DoLerp helper function performs bilinear filtering of the Gaussian texture in the shader itself, because bilinear filtering of floating-point textures is not supported on many graphics devices. Each blob billboard is rendered into a pair of temporary textures: one accumulates surface normal data and the other accumulates color data:

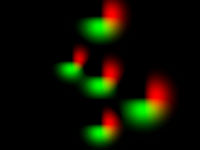

Figure 2: Surface normal buffer; Figure 3: Color buffer
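DoLerp's body is not shown in this section. A manual bilinear filter of the kind it performs can be sketched in C++ as follows, for a single-channel float texture with clamp-to-edge addressing and texel centers at (i + 0.5) / size. `FloatTexture` and `sampleBilinear` are hypothetical names, and the sample's shader version may differ in addressing mode and channel count.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel float texture, row-major, clamp-to-edge addressing.
struct FloatTexture {
    int width;
    int height;
    std::vector<float> texels;

    // Point fetch with coordinates clamped to the texture edges.
    float fetch(int x, int y) const {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return texels[static_cast<std::size_t>(y) * width + x];
    }
};

// Manual bilinear filter: blend the four texels surrounding (u, v).
float sampleBilinear(const FloatTexture& tex, float u, float v) {
    assert(tex.width > 0 && tex.height > 0);
    assert(tex.texels.size() == static_cast<std::size_t>(tex.width) * tex.height);

    // Continuous coordinates measured from texel centers.
    const float x = u * tex.width - 0.5f;
    const float y = v * tex.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(x));
    const int y0 = static_cast<int>(std::floor(y));
    const float fx = x - static_cast<float>(x0);  // horizontal blend factor in [0, 1)
    const float fy = y - static_cast<float>(y0);  // vertical blend factor in [0, 1)

    auto lerp = [](float a, float b, float t) { return a + (b - a) * t; };
    const float top = lerp(tex.fetch(x0, y0), tex.fetch(x0 + 1, y0), fx);
    const float bottom = lerp(tex.fetch(x0, y0 + 1), tex.fetch(x0 + 1, y0 + 1), fx);
    return lerp(top, bottom, fy);
}
```

In a shader, the four `fetch` calls would correspond to point-sampled reads one texel apart, blended with the HLSL `lerp` intrinsic. Sampling the center of a 2x2 texture holding 0, 1, 2, 3 returns their average, 1.5.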

The blended normal and color data are used by the BlobLightPS pixel shader for a final lighting pass which samples into an environment map based on the averaged surface normal:

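```hlsl
float4 BlobLightPS( VS_OUTPUT Input ) : COLOR
{
    // Width of the antialiasing band at the blob edge
    static const float aaval = THRESHOLD * 0.07f;

    // Accumulated surface data (weighted xy offsets, total weight in w) and color
    float4 blobdata = tex2D( SourceBlobSampler, Input.tCurr );
    float4 color    = tex2D( MatrixBufferSampler, Input.tCurr );

    // Turn the weighted color sum into a weighted average
    color /= blobdata.w;

    float3 surfacept = float3( blobdata.x / blobdata.w,
                               blobdata.y / blobdata.w,
                               blobdata.w - THRESHOLD );
    float3 thenorm = normalize( -surfacept );
    thenorm.z = -thenorm.z;

    float4 Output;
    // .rgb avoids an implicit float4-to-float3 truncation
    Output.rgb = color.rgb + texCUBE( EnvMapSampler, thenorm ).rgb;
    // Fade out pixels whose weight is close to the threshold (edge antialiasing)
    Output.rgb *= saturate( (blobdata.a - THRESHOLD) / aaval );
    Output.a = 1;

    return Output;
}
```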
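The arithmetic of BlobLightPS, excluding the texture reads, can likewise be checked in C++. This sketch takes one pixel's accumulated surface and color values and returns the averaged color, the environment-map lookup direction, and the edge coverage factor. `LitInputs` and `blobLight` are hypothetical names; `threshold` plays the role of the shader's THRESHOLD constant, whose actual value is not given here.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using float3 = std::array<float, 3>;

// Hypothetical bundle of the values BlobLightPS computes before its texture lookup.
struct LitInputs {
    float3 color;    // accumulated color divided by accumulated weight
    float3 normal;   // direction used to sample the environment map
    float coverage;  // edge antialiasing factor in [0, 1]
};

// surf = (sum w*dx, sum w*dy, unused, sum w); mat = (sum w*r, sum w*g, sum w*b, unused).
LitInputs blobLight(const std::array<float, 4>& surf,
                    const std::array<float, 4>& mat, float threshold) {
    assert(surf[3] > 0.0f && threshold > 0.0f);
    const float w = surf[3];
    const float aaval = threshold * 0.07f;

    LitInputs out{};
    for (int i = 0; i < 3; ++i) out.color[i] = mat[i] / w;  // color /= blobdata.w

    // surfacept = (x/w, y/w, w - THRESHOLD); normal = normalize(-surfacept), then z negated
    const float3 p = {surf[0] / w, surf[1] / w, w - threshold};
    const float len = std::sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]);
    assert(len > 0.0f);
    out.normal = {-p[0] / len, -p[1] / len, p[2] / len};

    // saturate((blobdata.a - THRESHOLD) / aaval)
    out.coverage = std::min(std::max((w - threshold) / aaval, 0.0f), 1.0f);
    return out;
}
```

For a pixel whose surface sum is (-0.25, 0, 0, 1) with a threshold of 0.5, the normal comes out at about (0.447, 0, 0.894) with full coverage. A total weight of 0.5175 lies halfway through the 0.035-wide antialiasing band and gives a coverage of about 0.5.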

The lighting pass yields the final composite image: